(BONUS) Torrents

In this chapter we are going to take a second look at how information is transferred over the Internet. Previously we learned about how the application layer (usually HTTP) packages up the useful information, the transport layer (usually TCP) makes sure the information is transferred reliably, and the Internet layer (usually IP) makes sure the information gets where it needs to go. Whenever your web browser loads a web page, all of these protocols work together to get that information onto your computer.

This works great for web pages—even fancy ones like www.weather.com which include lots of images and interactivity. That page only requires about 4 megabytes of data to be transferred over the Internet. That’s chump change these days. This process also works well when you want to download small files like images or songs.

But what if you need to transfer a lot more data? What if you want to download a super-high-definition movie or a huge videogame? Those sorts of files can be tens of gigabytes in size—thousands of times larger than a typical web page. Well now we have a problem, and to see why we need to think about how HTTP/TCP/IP transfers information.

Service

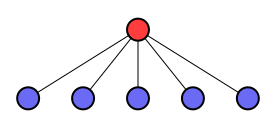

When it comes to transferring information over computer networks, the computers involved are often referred to as “clients” and “servers”.

The servers are the computers with the information and the clients are the computers that want the information.

We can think of these servers and clients just like if they were people in a restaurant. A computer requesting data over a network is like a client entering a restaurant and asking a server for the menu. To make the example concrete, let’s suppose that the menu has ten items on it and this is a really fancy restaurant so the menu isn’t written down, the server just memorizes it and tells it to each client. So the client sits down at the restaurant, waits for the server to come over, and they have this sort of conversation:

CLIENT: What’s on the menu today?

SERVER: We have a selection of ten items.

CLIENT: Let’s hear it, then.

SERVER: First we have octopus a la plancha with farro and a dolop of Greek yogurt

CLIENT: Mmm, sounds fabulous.

SERVER: Second, for contorni we have delicately pan roasted cauliflower with anchovies and a mediterranean salsa verde.

CLIENT: Okay.

SERVER: Third there is…

And so on. Remember that this conversation is an analogy for TCP/IP, so both the client and server take care to ensure that the items of the menu are communicated in order without any missing. This is just fine and dandy if your computer is the only one talking to the server, but what if another bossy customer sits nearby and wants to hear the menu as well?

CLIENT: What’s on the menu today?

SERVER: We have a selection of ten items.

CLIENT: Let’s hear it, then.

BOB: Hey! I want to hear the menu too!

SERVER: First we have octopus a la plancha with farro and a dolop of Greek yogurt

BOB: How many items were on the menu?

CLIENT: That octopus sounds fabulous.

SERVER: Ten items, Bob. The first was octopus a la plancha with farro and yogurt

SERVER: Second, for contorni we have delicately pan roasted cauliflower with anchovies and a mediterranean salsa verde.

CLIENT: Okay.

BOB: I don’t like octopus. What’s the second item?

SERVER: Third there is…

Confusing. Remember that with this analogy, the server has to talk to each client separately (he likes to make them feel special) because you’re really sending packets between computers over the Internet, not speaking in a room. But can you see how at this rate it’s going to take twice as long for you and Bob to both hear the menu? This is a very common problem on the Internet these days: the more people there are who want to get certain information from a server, the slower the server gets. This can be pretty frustrating for all of the Internet users who just want their data and don’t realize that the server is getting swamped with requests.

Sometimes people intentionally flood a server with more requests than it can handle in order to disrupt the service for everyone else. This is called a denial of service (DoS) attack. Imagine 100 people all coming into a restaurant, each spending 10 minutes looking at the menu, and then deciding they don’t want to eat there.

For normal web pages, this usually isn’t much of an issue since web pages require very little data and the Internet is so fast. Usually thousands of people can all access a web server with no noticeable slowdown. But when it comes to downloading huge files, those thousands of people can bring things to a crawl.

There are several workarounds for this problem. Some web servers are very clever and are designed to talk to multiple people simultaneously so that they can communicate with ten people just as fast as they communicate with one. However this is often more expensive to operate, and still has a hard limit. If you can talk to ten people at once, you’re still going to take ten times longer to finish if a hundred people show up.

A similar solution is to have multiple servers all with copies of the same data so that if one server is overloaded, people can be redirected to a different server to get their data (like how a restaurant has multiple servers). But this still has the same problem, because if enough people show up to overload every sever then things will still slow down.

Some websites instead force people into a queue, so that only a few people can access the server at once (like waiting outside a restaurant for a table). This ensures that the server never gets overloaded, but people might still end up waiting a while for their data. So this isn’t a solution either.

It seems like no matter what we do, it always takes longer for people to get their data if more people show up. There’s just no solution.

Peers

Of course there’s a solution. In fact, the exact opposite it possible: the more people there are who want the data, the faster they all can get it!

Let’s start by considering one simple improvement. Suppose eight people show up at once to hear the menu. If it takes a minute for someone to recite the menu, then it’s going to take eight minutes for everyone to get the menu from the server, right? But now suppose the sever starts by telling the entire menu to the first client. Now there are two people who know the menu: the server and the client who just heard it. So the first client can act like a server and repeat the menu to another client. Since there are now two people who know the menu, the next two clients can both hear it at once. After that there would be four people who know the menu, so the four who know can all repeat it to the four who don’t know. Instead of eight minutes, it would only take three.

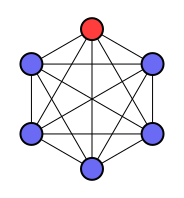

How can we make this process even better? Instead of communicating the whole menu at once to a single person, let the people all communicate with each other simultaneously. There’s no need for an orderly queue with people waiting turns; people can simply shout out “What’s the third item on the menu! Who has it?”, “I know the whole menu! If you need any items, I can tell you!” If the menu items are numbered, people don’t even need to hear them in order. The server can hand out random items to random people in random order and since they’re all sharing amongst themselves eventually everyone will get the whole thing.

This whole process goes even faster if, like the clever web server, each person can talk to multiple people at once. Each person who gets a copy of the menu is now like a new server and can talk to many clients at once to quickly hand out their information, and each newcomer can talk to many servers at once (since each client can now acts like a server) to quickly get the whole menu. This allows the whole process to go even faster than three minutes. It also means the original server could even go away entirely, and people could continue to get the menu since there are now many people to get it from.

This sort of network, where computers share data amongst each other, acting both as clients and servers is called a peer-to-peer (P2P) network.

In particular, the system I describe here is very similar to the BitTorrent protocol. BitTorrent is an application protocol that splits up files into small, independent pieces and allows computers to download these pieces from multiple sources simultaneously while also uploading received pieces to other BitTorrent clients. Each piece is verified with a cryptographic hash to ensure that none of the pieces have been modified by any of the peers. The protocol allows everyone downloading to communicate with each other so that they can easily find people who have the pieces they need.

Clients

BitTorrent is a great example of how different application layer protocols can be. BitTorrent and HTTP are both protocols that can run inside of TCP/IP, but they have completely different behavior. Because of this, your web browser probably doesn’t know how to handle BitTorrent data. To use BitTorrent, you will need to download a program called a “client” that understands the protocol. There are several available, but I recommend Deluge Transmission.

To use your BitTorrent client, you first need to download a torrent file (via regular HTTP). The torrent file describes the data you are going to download via BitTorrent and also tells you how to communicate with the other BitTorrent users downloading the file. You can then give the file to your BitTorrent client and it will start downloading it.

Exercises

- Download and install a BitTorrent client.

- If you want practice with BitTorrent, read on. We will be using it in Part 3.